|

这边讲述Image Deblur的博客还算浅显易懂,而且颇有点风趣。 基本是根据图像blur的公式来进行图像复原的,文章采用的是逆卷积de-convolution的方法。 采用de-convolution有两种,一种是blind还有一种是non-blind,后一种知道了PSF(点扩散函数)进行准确的复原,而前一种是基于不断的重复得到画质更好的结果,一般情况下,重复次数越多,效果越好,当然代价是较长的运算时间。 比较著名的Richardson-Lucy和Wiener Filter都是采用逆卷积的方式进行图像复原的。 有兴趣的读者可以参考该作者的其他文章,其都用OpenCV对上面的两种方法进行了实现。 本文所采用的方法也是blind的方式,但是效果估计一般,所以就不深究了。与大家分享。 ============= 原帖URL:http://gigadom.wordpress.com/201 ... rring-using-opencv/ Experiments with deblurring using OpenCV November 9, 2011 by Tinniam V Ganesh Deblurring refers to the removal of the blur from blurred images. The removal of blur is extremely important in the fields of medical imaging, astronomy etc. In medical imaging this is also known as denoising and finds extensive applications in ultra sonic and CT images. Similarly in astronomy there is a need to denoise and deblur images that are taken by space telescopes for e.g. the Hubble telescope. Deblurring is really a tough problem to solve though it has been around for ages. While going through some of the white papers on deblurring I have been particularly fascinated by the results. The blurred images are restored back to its pristine, original sharp image. It is almost magical and is amazing to know that a computer program is able to detect and remove patches, almost bordering on “computer perception”. However the solution to the problem is very involved. Almost every white paper I read in deblurring techniques involves abstruse mathematical concepts, enough to make one break into ‘cold sweat’ as I did several times. There are several well known methods of removing blur from images. The chief among them are the Richardson-Lucy method, the Weiner filter (do read my post “Dabbling with Wiener filter using OpenCV“), Radon transform or some form Bayesian filtering etc. All of these methods perform the converse of convolution known as de-convolution. Almost all approaches are based on the following equation b(x) = k(x) * i(x) + n(x) – (1) Where b(x) – is the blurred image, i(x) is the latent (good) image, k(x) is the blur kernel also known as the Point Spread Function (PSF) and n(x) is random noise. The key fact to note in the above equation is that the blurred image (b) is a convolution of a good latent image with a blur kernel plus some additive noise. So the good latent image is convolved by a blurring function or the points spread function and results in the blurred image. Now there are 2 unknowns in the above equation, both the blur kernel and the latent image. To obtain the latent image a process known as de-convolution has to be performed. If equation (1) is converted to the frequency domain using the Fourier transform on equation (1) we have B(w) = K(w) * I(w) + N(w) Ignoring noise we have I(w) = B(w)/K(w) or I(w) = DFT [B(w)]/DFT[K(w)] Or i(t) = Inverse DFT {[DFT B(w)]/DFT[K(w)]} If DFT[K(w)] can be represented as A+iB then the above can be represented as i(t) = Inverse DFT { DFT [B(w)] * (A – iB)} ————————— A**2 + B**2 where A-iB is the complex conjugate of the DFT of the blur kernel This is known as de-convolution. There are two types of de-convolution blind and non-blind. In the non-blind de-convolution method an initial blur kernel is assumed and is used to de-blur the image. In the blind convolution an initial estimate of the blur kernel is made and the latent image is successively iterated to obtain the best image. This is based on a method known as maximum-a-posteriori (MAP) to iterate through successive estimations of better and better blur kernels. The Richardson-Lucy algorithm also tries to estimate the blur kernel from the blurred image. In this post I perform de-convolution using an estimated blur kernel. As can be seen there is a slight reduction of the blur. By choosing a large kernel probably a 100 x 100 matrix would give a better result. Kindly do share any thoughts, ideas that you have. I have included the full code for this. Feel free to use the code and tweak it as you see fit and please share any findings you come across.  Steps in the de-convolution process

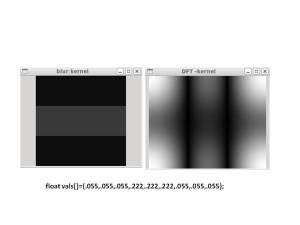

2. Perform DFT on blur kernel. Also perform inverse DFT to get back original contents. Make sure that the line for performing the inverse is commented out as it overwrites the DFT array. 2. Perform DFT on blur kernel. Also perform inverse DFT to get back original contents. Make sure that the line for performing the inverse is commented out as it overwrites the DFT array.// Perform DFT of kernel dft_B = cvShowDFT(k_image,dft_M1,dft_N1,"kernel"); //Perform inverse of kernel (check) cvShowInvDFT(k_image,dft_B,dft_M1,dft_N1,fp, "kernel");  3. Multiply the DFT of image with the complex conjugate of the DFT of the blur kernel // Multiply numerator with complex conjugate dft_C = cvCreateMat( dft_M1, dft_N1, CV_64FC2 ); 4. Compute A**2 + B**2 // Split Real and imaginary parts cvSplit( dft_B, image_ReB, image_ImB, 0, 0 ); cvPow( image_ReB, image_ReB, 2.0); cvPow( image_ImB, image_ImB, 2.0); cvAdd(image_ReB, image_ImB, image_ReB,0); 5. Divide numerator with A**2 + B**2 //Divide Numerator/A^2 + B^2 cvDiv(image_ReC, image_ReB, image_ReC, 1.0); cvDiv(image_ImC, image_ReB, image_ImC, 1.0); 6.Merge real and imaginary parts // Merge Real and complex parts cvMerge(image_ReC, image_ImC, NULL, NULL, complex_ImC); 7.Finally perform Inverse DFT cvShowInvDFT(im, complex_ImC,dft_M1,dft_N1,fp,"deblur"); 3. Multiply the DFT of image with the complex conjugate of the DFT of the blur kernel // Multiply numerator with complex conjugate dft_C = cvCreateMat( dft_M1, dft_N1, CV_64FC2 ); 4. Compute A**2 + B**2 // Split Real and imaginary parts cvSplit( dft_B, image_ReB, image_ImB, 0, 0 ); cvPow( image_ReB, image_ReB, 2.0); cvPow( image_ImB, image_ImB, 2.0); cvAdd(image_ReB, image_ImB, image_ReB,0); 5. Divide numerator with A**2 + B**2 //Divide Numerator/A^2 + B^2 cvDiv(image_ReC, image_ReB, image_ReC, 1.0); cvDiv(image_ImC, image_ReB, image_ImC, 1.0); 6.Merge real and imaginary parts // Merge Real and complex parts cvMerge(image_ReC, image_ImC, NULL, NULL, complex_ImC); 7.Finally perform Inverse DFT cvShowInvDFT(im, complex_ImC,dft_M1,dft_N1,fp,"deblur"); I have included the complete re-worked code below for the process of de-convolution. There was a small bug in the initial version. This has been fixed and the code below should work as is. I have included the complete re-worked code below for the process of de-convolution. There was a small bug in the initial version. This has been fixed and the code below should work as is.Do also take a look at my post "Reworking the Lucy Richardson algorithm in OpenCV"/* ============================================================================ Name : deconv.c Author : Tinniam V Ganesh Version : Copyright : Description : Deconvolution in OpenCV ============================================================================ */ #include <stdio.h> #include <stdlib.h> #include “cxcore.h” #include “cv.h” #include “highgui.h” int main(int argc, char ** argv) { int height,width,step,channels; uchar* data; uchar* data1; int i,j,k; float s; CvMat *dft_A; CvMat *dft_B; CvMat *dft_C; IplImage* im; IplImage* im1; IplImage* image_ReB; IplImage* image_ImB; IplImage* image_ReC; IplImage* image_ImC; IplImage* complex_ImC; IplImage* image_ReDen; IplImage* image_ImDen; FILE *fp; fp = fopen(“test.txt”,”w+”); int dft_M,dft_N; int dft_M1,dft_N1; CvMat* cvShowDFT(); void cvShowInvDFT(); im1 = cvLoadImage( “kutty-1.jpg”,1 ); cvNamedWindow(“original-color”, 0); cvShowImage(“original-color”, im1); im = cvLoadImage( “kutty-1.jpg”, CV_LOAD_IMAGE_GRAYSCALE ); if( !im ) return -1; cvNamedWindow(“original-gray”, 0); cvShowImage(“original-gray”, im); // Create blur kernel (non-blind) //float vals[]={.000625,.000625,.000625,.003125,.003125,.003125,.000625,.000625,.000625}; //float vals[]={-0.167,0.333,0.167,-0.167,.333,.167,-0.167,.333,.167}; float vals[]={.055,.055,.055,.222,.222,.222,.055,.055,.055}; CvMat kernel = cvMat(3, // number of rows 3, // number of columns CV_32FC1, // matrix data type vals); IplImage* k_image_hdr; IplImage* k_image; k_image_hdr = cvCreateImageHeader(cvSize(3,3),IPL_DEPTH_64F,2); k_image = cvCreateImage(cvSize(3,3),IPL_DEPTH_64F,1); k_image = cvGetImage(&kernel,k_image_hdr); /*IplImage* k_image; k_image = cvLoadImage( “kernel4.bmp”,0 );*/ cvNamedWindow(“blur kernel”, 0); height = k_image->height; width = k_image->width; step = k_image->widthStep; channels = k_image->nChannels; //data1 = (float *)(k_image->imageData); data1 = (uchar *)(k_image->imageData); cvShowImage(“blur kernel”, k_image); dft_M = cvGetOptimalDFTSize( im->height – 1 ); dft_N = cvGetOptimalDFTSize( im->width – 1 ); //dft_M1 = cvGetOptimalDFTSize( im->height+99 – 1 ); //dft_N1 = cvGetOptimalDFTSize( im->width+99 – 1 ); dft_M1 = cvGetOptimalDFTSize( im->height+3 – 1 ); dft_N1 = cvGetOptimalDFTSize( im->width+3 – 1 ); // Perform DFT of original image dft_A = cvShowDFT(im, dft_M1, dft_N1,”original”); //Perform inverse (check & comment out) – Commented as it overwrites dft_A //cvShowInvDFT(im,dft_A,dft_M1,dft_N1,fp, “original”); // Perform DFT of kernel dft_B = cvShowDFT(k_image,dft_M1,dft_N1,”kernel”); //Perform inverse of kernel (check & comment out) – commented as it overwrites dft_B //cvShowInvDFT(k_image,dft_B,dft_M1,dft_N1,fp, “kernel”); // Multiply numerator with complex conjugate dft_C = cvCreateMat( dft_M1, dft_N1, CV_64FC2 ); printf(“%d %d %d %d\n”,dft_M,dft_N,dft_M1,dft_N1); // Multiply DFT(blurred image) * complex conjugate of blur kernel cvMulSpectrums(dft_A,dft_B,dft_C,CV_DXT_MUL_CONJ); // Split Fourier in real and imaginary parts image_ReC = cvCreateImage( cvSize(dft_N1, dft_M1), IPL_DEPTH_64F, 1); image_ImC = cvCreateImage( cvSize(dft_N1, dft_M1), IPL_DEPTH_64F, 1); complex_ImC = cvCreateImage( cvSize(dft_N1, dft_M1), IPL_DEPTH_64F, 2); printf(“%d %d %d %d\n”,dft_M,dft_N,dft_M1,dft_N1); //cvSplit( dft_C, image_ReC, image_ImC, 0, 0 ); cvSplit( dft_C, image_ReC, image_ImC, 0, 0 ); // Compute A^2 + B^2 of denominator or blur kernel image_ReB = cvCreateImage( cvSize(dft_N1, dft_M1), IPL_DEPTH_64F, 1); image_ImB = cvCreateImage( cvSize(dft_N1, dft_M1), IPL_DEPTH_64F, 1); // Split Real and imaginary parts cvSplit( dft_B, image_ReB, image_ImB, 0, 0 ); cvPow( image_ReB, image_ReB, 2.0); cvPow( image_ImB, image_ImB, 2.0); cvAdd(image_ReB, image_ImB, image_ReB,0); //Divide Numerator/A^2 + B^2 cvDiv(image_ReC, image_ReB, image_ReC, 1.0); cvDiv(image_ImC, image_ReB, image_ImC, 1.0); // Merge Real and complex parts cvMerge(image_ReC, image_ImC, NULL, NULL, complex_ImC); // Perform Inverse cvShowInvDFT(im, complex_ImC,dft_M1,dft_N1,fp,”deblur”); cvWaitKey(-1); return 0; } CvMat* cvShowDFT(im, dft_M, dft_N,src) IplImage* im; int dft_M, dft_N; char* src; { IplImage* realInput; IplImage* imaginaryInput; IplImage* complexInput; CvMat* dft_A, tmp; IplImage* image_Re; IplImage* image_Im; char str[80]; double m, M; realInput = cvCreateImage( cvGetSize(im), IPL_DEPTH_64F, 1); imaginaryInput = cvCreateImage( cvGetSize(im), IPL_DEPTH_64F, 1); complexInput = cvCreateImage( cvGetSize(im), IPL_DEPTH_64F, 2); cvScale(im, realInput, 1.0, 0.0); cvZero(imaginaryInput); cvMerge(realInput, imaginaryInput, NULL, NULL, complexInput); dft_A = cvCreateMat( dft_M, dft_N, CV_64FC2 ); image_Re = cvCreateImage( cvSize(dft_N, dft_M), IPL_DEPTH_64F, 1); image_Im = cvCreateImage( cvSize(dft_N, dft_M), IPL_DEPTH_64F, 1); // copy A to dft_A and pad dft_A with zeros cvGetSubRect( dft_A, &tmp, cvRect(0,0, im->width, im->height)); cvCopy( complexInput, &tmp, NULL ); if( dft_A->cols > im->width ) { cvGetSubRect( dft_A, &tmp, cvRect(im->width,0, dft_A->cols – im->width, im->height)); cvZero( &tmp ); } // no need to pad bottom part of dft_A with zeros because of // use nonzero_rows parameter in cvDFT() call below cvDFT( dft_A, dft_A, CV_DXT_FORWARD, complexInput->height ); strcpy(str,”DFT -”); strcat(str,src); cvNamedWindow(str, 0); // Split Fourier in real and imaginary parts cvSplit( dft_A, image_Re, image_Im, 0, 0 ); // Compute the magnitude of the spectrum Mag = sqrt(Re^2 + Im^2) cvPow( image_Re, image_Re, 2.0); cvPow( image_Im, image_Im, 2.0); cvAdd( image_Re, image_Im, image_Re, NULL); cvPow( image_Re, image_Re, 0.5 ); // Compute log(1 + Mag) cvAddS( image_Re, cvScalarAll(1.0), image_Re, NULL ); // 1 + Mag cvLog( image_Re, image_Re ); // log(1 + Mag) cvMinMaxLoc(image_Re, &m, &M, NULL, NULL, NULL); cvScale(image_Re, image_Re, 1.0/(M-m), 1.0*(-m)/(M-m)); cvShowImage(str, image_Re); return(dft_A); } void cvShowInvDFT(im, dft_A, dft_M, dft_N,fp, src) IplImage* im; CvMat* dft_A; int dft_M,dft_N; FILE *fp; char* src; { IplImage* realInput; IplImage* imaginaryInput; IplImage* complexInput; IplImage * image_Re; IplImage * image_Im; double m, M; char str[80]; realInput = cvCreateImage( cvGetSize(im), IPL_DEPTH_64F, 1); imaginaryInput = cvCreateImage( cvGetSize(im), IPL_DEPTH_64F, 1); complexInput = cvCreateImage( cvGetSize(im), IPL_DEPTH_64F, 2); image_Re = cvCreateImage( cvSize(dft_N, dft_M), IPL_DEPTH_64F, 1); image_Im = cvCreateImage( cvSize(dft_N, dft_M), IPL_DEPTH_64F, 1); //cvDFT( dft_A, dft_A, CV_DXT_INV_SCALE, complexInput->height ); cvDFT( dft_A, dft_A, CV_DXT_INV_SCALE, dft_M); strcpy(str,”DFT INVERSE – “); strcat(str,src); cvNamedWindow(str, 0); // Split Fourier in real and imaginary parts cvSplit( dft_A, image_Re, image_Im, 0, 0 ); // Compute the magnitude of the spectrum Mag = sqrt(Re^2 + Im^2) cvPow( image_Re, image_Re, 2.0); cvPow( image_Im, image_Im, 2.0); cvAdd( image_Re, image_Im, image_Re, NULL); cvPow( image_Re, image_Re, 0.5 ); // Compute log(1 + Mag) cvAddS( image_Re, cvScalarAll(1.0), image_Re, NULL ); // 1 + Mag cvLog( image_Re, image_Re ); // log(1 + Mag) cvMinMaxLoc(image_Re, &m, &M, NULL, NULL, NULL); cvScale(image_Re, image_Re, 1.0/(M-m), 1.0*(-m)/(M-m)); //cvCvtColor(image_Re, image_Re, CV_GRAY2RGBA); cvShowImage(str, image_Re); } |

[技术| 编程·课件·Linux] [Image Deblur] Experiments with deblurring using Ope ...

admin

· 发布于 2012-12-11 17:42

· 3131 次阅读

转载文章时务必注明原作者及原始链接,并注明「发表于 软院网 RuanYuan.Net 」,并不得对作品进行修改。

暂无回复。